
Understanding Caching in Software Design: A Complete Beginner’s Guide

Let me walk you through one of the most powerful concepts in software design—caching. By the end of this post, you’ll understand exactly what caching is, why it matters, and how different caching strategies work.

What Does Caching Mean in Software Development?

Think of caching as keeping a copy of something you use frequently right at your fingertips instead of walking to the storage room every time you need it.

In software terms, caching is the practice of storing copies of data somewhere faster to reach, either in local memory or in an external cache, so future requests can retrieve it more quickly. It’s like keeping your favorite books on your desk rather than fetching them from the library each time you want to read.

Why Do We Need Caching? Understanding the Problem

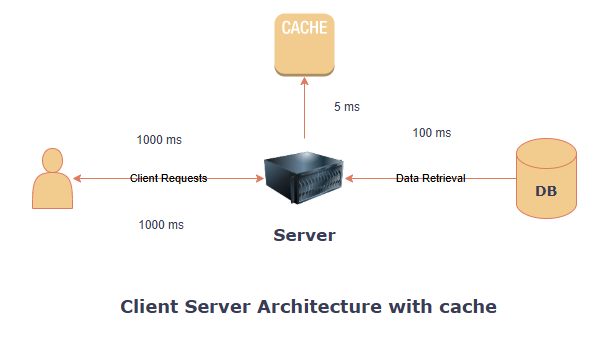

Let me explain how this works in a typical client-server setup:

When you make a request to a server asking for data or information, the server has to reach out to the database to fetch that information. Now, imagine if the server had to make this database trip every single time someone requested the same or similar information. This creates two major issues:

- Increased latency – Users experience delays and slow response times

- Database overload – Your database gets bombarded with redundant calls

This is where caching comes to the rescue! By storing frequently accessed data in the server’s local memory, we can retrieve it much faster without constantly querying the database.

Understanding Cache Hits and Cache Misses

Before we dive into different caching strategies, let’s learn two important terms:

- Cache Hit: When a request comes in and the data is found in the cache, it’s served directly from there—lightning fast!

- Cache Miss: When the requested data isn’t found in the cache, the system must fetch it from the database, which is slower.
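To see hits and misses in action, here is a small sketch using Python's built-in `functools.lru_cache`, which keeps its own hit and miss counters. The `load_profile` function is a hypothetical stand-in for a slow database query, not something from a real application.

```python
from functools import lru_cache


@lru_cache(maxsize=128)
def load_profile(user_id: int) -> str:
    # Hypothetical stand-in for a slow database query.
    return f"profile-{user_id}"


load_profile(1)  # cache miss: first request for this key
load_profile(1)  # cache hit: served from the cache
load_profile(2)  # cache miss: a different key

info = load_profile.cache_info()
print(info.hits, info.misses)  # prints: 1 2
```

One hit and two misses: only the repeated request for the same key was served from the cache.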

Now, let’s explore the different types of caching strategies based on where the cache is placed.

Types of Caching Strategies You Should Know

1. Application Server Cache (Server-Side Caching)

Let me introduce you to the most common caching approach—application server caching, also called server-side caching.

Here’s how it works: The cache lives on (or right next to) your application server, storing the most frequently used data. This dramatically improves response times and reduces the load on your database.

The workflow is simple:

- A request arrives at your server

- The server checks the cache first

- If the data exists (Cache Hit), it’s served immediately from the cache

- If not (Cache Miss), the server fetches it from the database and typically stores a copy in the cache for next time
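The workflow above can be sketched in a few lines of Python. This is a minimal illustration, assuming a plain dictionary as the cache and another dictionary standing in for the database; the names `DATABASE`, `query_database`, and `get` are made up for the example. In a real setup the dictionary would be replaced by a tool like Redis or Memcached.

```python
from typing import Dict, Optional

# Hypothetical stand-in for a real database table.
DATABASE: Dict[str, str] = {"user:1": "Alice", "user:2": "Bob"}

cache: Dict[str, str] = {}
db_calls = 0  # counts how often we actually go to the database


def query_database(key: str) -> Optional[str]:
    """Simulate a (slow) database lookup."""
    global db_calls
    db_calls += 1
    return DATABASE.get(key)


def get(key: str) -> Optional[str]:
    """Check the cache first, fall back to the database on a miss."""
    if key in cache:
        return cache[key]          # cache hit
    value = query_database(key)    # cache miss
    if value is not None:
        cache[key] = value         # store a copy for the next request
    return value


first = get("user:1")   # miss: goes to the database
second = get("user:1")  # hit: served from the cache
print(first, second, db_calls)  # prints: Alice Alice 1
```

Two requests for the same key result in only one database call, which is exactly the saving caching is meant to give you.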

Popular tools you can use: Redis and Memcached are the industry favorites for server-side caching.

2. Distributed Cache: Scaling Your Caching Solution

As your application grows, you’ll need something more robust. That’s where distributed caching comes in—think of it as the advanced version of basic caching.

Instead of relying on a single cache (which creates a single point of failure), distributed caching uses multiple interconnected cache nodes working together.

Here’s what distributed caching solves for you:

- Eliminates the single point of failure – If one cache node goes down, the others keep working

- Handles high traffic loads – Multiple nodes share the workload, speeding up your caching solution even under heavy traffic

Popular distributed cache tools: Redis Cluster, Hazelcast, and Amazon ElastiCache are excellent choices.
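To make the idea concrete, here is a toy sketch of spreading keys across several cache nodes. It is an assumption-heavy simplification: the `CacheNode` and `DistributedCache` classes are invented for this example, the nodes are just in-process dictionaries, and keys are routed with a simple hash-modulo rule. Real systems usually use smarter schemes such as consistent hashing, so that losing a node does not remap most keys.

```python
import hashlib
from typing import Dict, List, Optional


class CacheNode:
    """One cache node; here just a dictionary with an on/off switch."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.store: Dict[str, str] = {}
        self.alive = True


class DistributedCache:
    """Routes each key to one of the nodes that are currently alive."""

    def __init__(self, nodes: List[CacheNode]) -> None:
        self.nodes = nodes

    def _node_for(self, key: str) -> Optional[CacheNode]:
        alive = [n for n in self.nodes if n.alive]
        if not alive:
            return None
        # Stable hash so the same key always maps to the same node
        # as long as the set of alive nodes does not change.
        digest = hashlib.sha256(key.encode()).hexdigest()
        return alive[int(digest, 16) % len(alive)]

    def set(self, key: str, value: str) -> None:
        node = self._node_for(key)
        if node is not None:
            node.store[key] = value

    def get(self, key: str) -> Optional[str]:
        node = self._node_for(key)
        return node.store.get(key) if node is not None else None


nodes = [CacheNode("node-a"), CacheNode("node-b"), CacheNode("node-c")]
cluster = DistributedCache(nodes)

cluster.set("user:1", "Alice")
before_failure = cluster.get("user:1")

nodes[0].alive = False             # simulate one node going down
cluster.set("user:2", "Bob")       # the cluster still accepts writes
after_failure = cluster.get("user:2")
```

After a node fails, some previously cached keys may turn into cache misses, but the cache as a whole keeps serving requests instead of going down completely.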

3. Global Cache: One Unified Cache for All Requests

Imagine having one central cache that serves all requests across your entire system—that’s a global cache.

Here’s what makes it special: The global cache is uniform across all requests, so every request sees the same cached data no matter which server node handles it. When data is added through any server node, it is also written to the global cache, which keeps the cached data consistent throughout your system.
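Here is one way to picture this in code. It is only a sketch under simple assumptions: `GlobalCache` and `AppServer` are hypothetical classes, and the "global" cache is just one shared object that every server writes to and reads from.

```python
from typing import Dict, Optional


class GlobalCache:
    """A single cache shared by every server node."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}

    def put(self, key: str, value: str) -> None:
        self._data[key] = value

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)


class AppServer:
    """A server node that copies every write into the global cache."""

    def __init__(self, name: str, global_cache: GlobalCache) -> None:
        self.name = name
        self.global_cache = global_cache

    def save(self, key: str, value: str) -> None:
        # Data added on this node is also written to the global cache.
        self.global_cache.put(key, value)

    def read(self, key: str) -> Optional[str]:
        return self.global_cache.get(key)


shared = GlobalCache()
server_a = AppServer("server-a", shared)
server_b = AppServer("server-b", shared)

server_a.save("session:42", "logged-in")
seen_by_b = server_b.read("session:42")
print(seen_by_b)  # prints: logged-in
```

Data written through one server is immediately visible to the other, because both talk to the same cache.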

4. CDN (Content Delivery Network): Location-Based Caching

Now, let me teach you about one of the most fascinating caching strategies—Content Delivery Networks or CDNs.

Understanding the distance problem:

Imagine you have a server in India, and a user in the United States makes a request. The physical distance creates significant latency—the data has to travel thousands of miles! The same problem occurs in reverse or with any other distant client-server combination.

How CDNs solve this:

CDNs consist of strategically placed caches and servers around the world that deliver data based on the user’s geographic location. When someone in the US requests data, it’s served from a nearby US server rather than traveling all the way from India.
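As a rough sketch of that idea, the example below keeps one edge cache per region and falls back to the origin server only on the first request. Everything here is hypothetical: the `ORIGIN` dictionary stands in for the server in India, `EdgeCache` and `serve` are invented names, and real CDNs pick the nearest edge through network-level routing rather than a simple region lookup.

```python
from typing import Dict, Tuple

# Hypothetical origin server (the one located in India in the example above).
ORIGIN: Dict[str, str] = {"/logo.png": "logo-bytes"}


class EdgeCache:
    """A cache located in one geographic region."""

    def __init__(self, region: str) -> None:
        self.region = region
        self.store: Dict[str, str] = {}

    def fetch(self, path: str) -> Tuple[str, str]:
        if path in self.store:
            return self.store[path], "edge hit"
        content = ORIGIN[path]      # long trip to the origin server
        self.store[path] = content  # keep a copy close to the users
        return content, "origin fetch"


EDGES: Dict[str, EdgeCache] = {"US": EdgeCache("US"), "IN": EdgeCache("IN")}


def serve(user_region: str, path: str) -> Tuple[str, str]:
    """Serve a request from the edge cache in the user's region."""
    # Assumption: unknown regions fall back to the edge next to the origin.
    edge = EDGES.get(user_region, EDGES["IN"])
    return edge.fetch(path)


first = serve("US", "/logo.png")
second = serve("US", "/logo.png")
print(first[1], "|", second[1])  # prints: origin fetch | edge hit
```

Only the first US request travels to the origin; later US requests are answered by the nearby edge cache.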

Bonus benefit: CDNs can also help you comply with local data regulations that require data to stay within specific geographic boundaries, since you can control which regions serve and store your content.

Key Benefits of Implementing Caching

Let me summarize why caching is essential for modern applications:

- Reduces network calls – Fewer trips to the database mean less network traffic

- Minimizes latency – Users get faster response times and better experiences

- Decreases server load – Your servers can handle more requests without breaking a sweat

- Improves data availability – Cached data can still be served when the database is slow or briefly unavailable, increasing system reliability

Your Next Steps

Now that you understand caching and its different strategies, you can start thinking about which approach fits your application best. Start simple with server-side caching, and scale up to distributed caching or CDNs as your needs grow.

Remember: A good caching strategy is about choosing the right tool for your specific use case, not just implementing the most advanced solution.
